This was my final project for the PSYC 3501: Complex Systems course.

The class focused on complexity theory, which attempts to answer the question “how does organized behavior arise from independent and interacting units?” Neural networks are a great example: a simple training rule lets a network descend the error gradient and, in doing so, estimate the underlying function driving the system.

Generating Crude Weather Predictions with Rust and Backpropagation

The goal of this project was to model tomorrow’s weather based on today’s conditions. I expected poor results, since real climate models are enormously complicated and this one was only running on my own computer, but I was curious what was possible.

Parameters and Dataset

I retrieved a dataset of the past 52 years’ worth of daily weather summaries from my hometown, Niskayuna, New York (about 17,500 records after cleaning).

The parameters used for training were the day of the year (1-365), maximum daily temperature (°C), minimum daily temperature (°C), temperature at the time of reading, dew point, humidity (%), precipitation (cm), wind speed (km/h), wind direction (0-360°), cloud cover (%), sea-level pressure (millibars), visibility (km), and the current conditions (clear, partially cloudy, overcast, snow, rain, freezing drizzle/freezing rain, ice).

The weather conditions were represented as a binary (multi-hot) array: each condition occurring that day switched its input on (1) while the rest stayed off (0), so a day with several conditions had several inputs on at once.

A simplified example: (sunny, cloudy, rainy) = (0, 1, 1) represents a cloudy, rainy day.

The neural network used the same encoding for its outputs.
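The multi-hot encoding can be sketched in Rust as follows. This is an illustration rather than the project’s code: the order of `CONDITIONS`, the function names `encode_conditions` and `decode_conditions`, and the 0.5 decoding threshold are all assumptions.

```rust
/// The seven conditions from the parameter list, in an assumed fixed order.
const CONDITIONS: [&str; 7] = [
    "clear",
    "partially cloudy",
    "overcast",
    "snow",
    "rain",
    "freezing drizzle/freezing rain",
    "ice",
];

/// Multi-hot encode a day's conditions: 1.0 for each condition present, 0.0 otherwise.
fn encode_conditions(present: &[&str]) -> Vec<f64> {
    CONDITIONS
        .iter()
        .map(|c| if present.contains(c) { 1.0 } else { 0.0 })
        .collect()
}

/// Decode network outputs (sigmoid activations between 0 and 1) back into condition
/// names, treating any output above `threshold` as "on".
fn decode_conditions(outputs: &[f64], threshold: f64) -> Vec<&'static str> {
    CONDITIONS
        .iter()
        .zip(outputs)
        .filter(|&(_, &y)| y > threshold)
        .map(|(c, _)| *c)
        .collect()
}
```

For example, an overcast, rainy day encodes to `[0, 0, 1, 0, 1, 0, 0]`, and any output above the threshold is read back as that condition occurring.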

Each training case used one day’s parameters as the input and the next day’s weather conditions (rain, snow, etc.) as the expected output. The training cases were shuffled between epochs.
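A minimal sketch of building the today-to-tomorrow pairs and shuffling them once per epoch. The `Example` struct, `make_examples`, `shuffle`, and the small `XorShift64` generator are hypothetical names; the generator is only there so the sketch needs no external crates (a real project might use the `rand` crate instead). The records are assumed to be in date order, one per day.

```rust
/// One training case: one day's inputs and the next day's multi-hot conditions.
struct Example {
    input: Vec<f64>,
    target: Vec<f64>,
}

/// Pair day i's feature vector with day i+1's condition vector.
/// `features[i]` and `conditions[i]` are assumed to describe the same day.
fn make_examples(features: &[Vec<f64>], conditions: &[Vec<f64>]) -> Vec<Example> {
    assert_eq!(features.len(), conditions.len());
    features
        .iter()
        .zip(conditions.iter().skip(1)) // shift the labels forward by one day
        .map(|(x, y)| Example { input: x.clone(), target: y.clone() })
        .collect()
}

/// Minimal xorshift64 generator (seed must be nonzero).
struct XorShift64(u64);

impl XorShift64 {
    fn next_u64(&mut self) -> u64 {
        let mut x = self.0;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.0 = x;
        x
    }
}

/// Fisher-Yates shuffle, intended to be called at the start of every epoch.
fn shuffle<T>(items: &mut [T], rng: &mut XorShift64) {
    for i in (1..items.len()).rev() {
        let j = (rng.next_u64() % (i as u64 + 1)) as usize;
        items.swap(i, j);
    }
}
```

Because each day is paired with the following one, the last day in the dataset has no label and is dropped, so N days give N - 1 cases. If cleaning removed some days, a real implementation would also need to check that paired records are actually consecutive dates; this sketch does not.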

Activation function

The neurons used the sigmoid activation function. Biases were initialized to 0.0 for all neurons, while weights were drawn at random from the range (-2.95, 2.95), which the sigmoid maps to roughly (0.05, 0.95). I chose this wider range instead of (-1, 1) because the network seemed to have trouble reaching activations at both extremes, and I hoped a wider random initialization could help.
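The activation and initialization described above, sketched in Rust. Only the sigmoid, the zero biases, and the ±2.95 weight range come from the text; the helper names `sigmoid_prime_from_output` and `init_layer` and the inline xorshift generator are assumptions for illustration.

```rust
/// Logistic sigmoid activation.
fn sigmoid(x: f64) -> f64 {
    1.0 / (1.0 + (-x).exp())
}

/// Sigmoid derivative expressed through its output y = sigmoid(x): y * (1 - y).
/// Backpropagation can reuse the stored activation instead of recomputing exp().
fn sigmoid_prime_from_output(y: f64) -> f64 {
    y * (1.0 - y)
}

/// Build one layer: weights uniform in [-limit, limit), biases all 0.0.
fn init_layer(n_in: usize, n_out: usize, limit: f64, seed: u64) -> (Vec<Vec<f64>>, Vec<f64>) {
    // Tiny xorshift64 generator so the sketch needs no external crates; state must not be 0.
    let mut state = seed.max(1);
    let mut next_unit = || {
        state ^= state << 13;
        state ^= state >> 7;
        state ^= state << 17;
        (state >> 11) as f64 / (1u64 << 53) as f64 // uniform in [0, 1)
    };
    let weights: Vec<Vec<f64>> = (0..n_out)
        .map(|_| {
            (0..n_in)
                .map(|_| (2.0 * next_unit() - 1.0) * limit)
                .collect::<Vec<f64>>()
        })
        .collect();
    (weights, vec![0.0; n_out])
}
```

`sigmoid(2.95)` evaluates to about 0.950 and `sigmoid(-2.95)` to about 0.050, which is where the (0.05, 0.95) figure comes from.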

Discussion

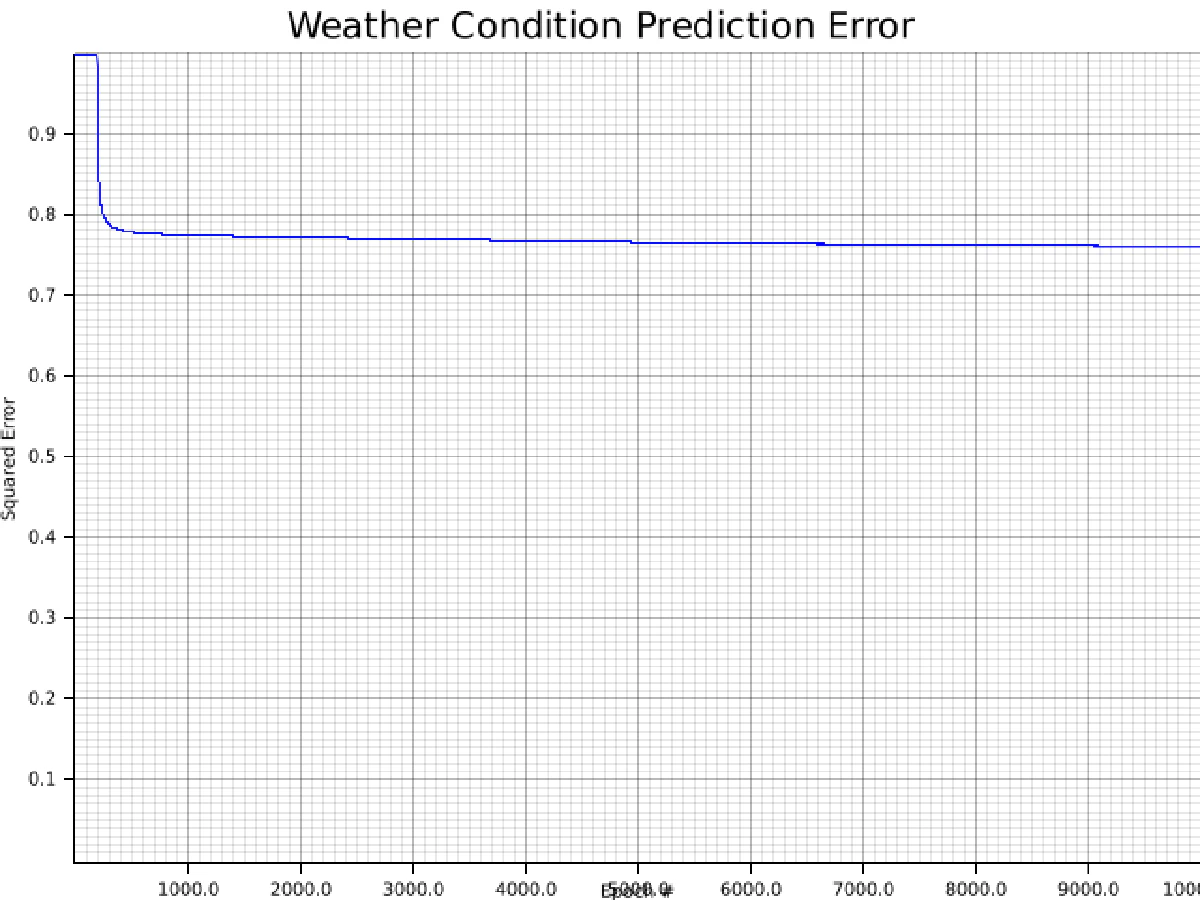

I had planned to analyze training runs of up to 100,000 epochs and to compare different network sizes, but I underestimated how long training would take, so those results are not included. The main goal was for the network to successfully descend the error gradient, and the squared error over time appears to confirm this, since it decreases monotonically. I also verified the implementation by training it on XOR and on multilayer OR and AND problems. Training speed could be improved significantly with multi-threading, which I attempted but could not finish in time. Ideally it would give a speedup of up to N times, where N is the number of cores on your computer, which would have made the 100,000-epoch run much more feasible.
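A self-contained XOR sanity check in the spirit of the one described above: a tiny 2-4-1 sigmoid network trained with online backpropagation on squared error. The network size, learning rate, seed, epoch count, and all names here are assumptions, not the project’s actual code or settings.

```rust
/// Logistic sigmoid activation.
fn sigmoid(x: f64) -> f64 {
    1.0 / (1.0 + (-x).exp())
}

/// A tiny 2-H-1 sigmoid network (hypothetical layout, for the XOR check only).
struct Net {
    w1: Vec<[f64; 2]>, // input -> hidden weights, one pair per hidden neuron
    b1: Vec<f64>,      // hidden biases
    w2: Vec<f64>,      // hidden -> output weights
    b2: f64,           // output bias
}

impl Net {
    /// Random weights in [-1, 1) from an inline xorshift64 generator; biases start at 0.0.
    fn new(hidden: usize, seed: u64) -> Net {
        let mut s = seed.max(1);
        let mut uniform = || {
            s ^= s << 13;
            s ^= s >> 7;
            s ^= s << 17;
            (s >> 11) as f64 / (1u64 << 53) as f64 * 2.0 - 1.0
        };
        let w1: Vec<[f64; 2]> = (0..hidden).map(|_| [uniform(), uniform()]).collect();
        let w2: Vec<f64> = (0..hidden).map(|_| uniform()).collect();
        Net { w1, b1: vec![0.0; hidden], w2, b2: 0.0 }
    }

    /// Forward pass: returns the hidden activations and the output activation.
    fn forward(&self, x: [f64; 2]) -> (Vec<f64>, f64) {
        let h: Vec<f64> = self
            .w1
            .iter()
            .zip(&self.b1)
            .map(|(w, b)| sigmoid(w[0] * x[0] + w[1] * x[1] + b))
            .collect();
        let z: f64 = h.iter().zip(&self.w2).map(|(hi, wi)| hi * wi).sum::<f64>() + self.b2;
        (h, sigmoid(z))
    }

    /// One online gradient-descent step on E = 0.5 * (y - t)^2 for a single example.
    fn train_step(&mut self, x: [f64; 2], target: f64, lr: f64) {
        let (h, y) = self.forward(x);
        let delta_out = (y - target) * y * (1.0 - y); // dE/dz at the output
        for j in 0..h.len() {
            // Hidden delta must use the output weight from *before* this update.
            let delta_h = delta_out * self.w2[j] * h[j] * (1.0 - h[j]);
            self.w2[j] -= lr * delta_out * h[j];
            self.w1[j][0] -= lr * delta_h * x[0];
            self.w1[j][1] -= lr * delta_h * x[1];
            self.b1[j] -= lr * delta_h;
        }
        self.b2 -= lr * delta_out;
    }

    /// Sum of squared errors over a data set.
    fn sse(&self, data: &[([f64; 2], f64)]) -> f64 {
        data.iter().map(|&(x, t)| (self.forward(x).1 - t).powi(2)).sum()
    }
}

const XOR: [([f64; 2], f64); 4] = [
    ([0.0, 0.0], 0.0),
    ([0.0, 1.0], 1.0),
    ([1.0, 0.0], 1.0),
    ([1.0, 1.0], 0.0),
];

/// Train on XOR and return (error before training, error after training).
fn train_xor(epochs: usize, lr: f64) -> (f64, f64) {
    let mut net = Net::new(4, 0x9E37_79B9_7F4A_7C15); // arbitrary fixed seed
    let before = net.sse(&XOR);
    for _ in 0..epochs {
        for &(x, t) in XOR.iter() {
            net.train_step(x, t, lr);
        }
    }
    (before, net.sse(&XOR))
}
```

Calling `train_xor(10_000, 0.5)` and checking that the error after training is below the error before is a quick way to confirm the gradient is being descended. If a run stalls on a plateau, trying a different seed or more hidden units is a common remedy.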

One thing I noticed was that as the error approached its effective minimum, the network would get stuck and the error would no longer decrease monotonically, at least near that error level and with the learning rate I used.

Additional features

The neural network can also save and reload its parameters, in case you want to stop training early or a crash occurs. However, it does not save the training error over time, so a resumed run loses its error history; I would like to add that in the future, along with finishing the multi-threaded training.
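One simple way to checkpoint a network, sketched under assumptions: all weights and biases are flattened into one list in a fixed order, and the file is plain text with one whitespace-separated line each for the layer sizes, the parameters, and the per-epoch error history. `Checkpoint`, `join`, and `parse_line` are hypothetical names, and storing `error_history` illustrates the resume feature described above rather than something the project currently does.

```rust
use std::fs;
use std::io;
use std::path::Path;
use std::str::FromStr;

/// Hypothetical checkpoint: layer sizes, every weight and bias flattened in a fixed
/// order, and the per-epoch training error so a resumed run keeps its history.
#[derive(Debug, PartialEq)]
struct Checkpoint {
    layer_sizes: Vec<usize>,
    params: Vec<f64>,
    error_history: Vec<f64>,
}

/// Join values into one whitespace-separated line.
fn join<T: ToString>(values: &[T]) -> String {
    values.iter().map(|v| v.to_string()).collect::<Vec<_>>().join(" ")
}

/// Parse one whitespace-separated line; a missing line is treated as empty.
fn parse_line<T: FromStr>(line: Option<&str>) -> io::Result<Vec<T>> {
    line.unwrap_or("")
        .split_whitespace()
        .map(|s| {
            s.parse::<T>().map_err(|_| {
                io::Error::new(io::ErrorKind::InvalidData, format!("bad value: {s}"))
            })
        })
        .collect()
}

impl Checkpoint {
    /// Write the checkpoint as three lines of plain text.
    fn save(&self, path: &Path) -> io::Result<()> {
        let text = format!(
            "{}\n{}\n{}\n",
            join(&self.layer_sizes),
            join(&self.params),
            join(&self.error_history)
        );
        fs::write(path, text)
    }

    /// Read a checkpoint written by `save`.
    fn load(path: &Path) -> io::Result<Checkpoint> {
        let text = fs::read_to_string(path)?;
        let mut lines = text.lines();
        Ok(Checkpoint {
            layer_sizes: parse_line::<usize>(lines.next())?,
            params: parse_line::<f64>(lines.next())?,
            error_history: parse_line::<f64>(lines.next())?,
        })
    }
}
```

Rust’s `Display` formatting for `f64` prints the shortest string that parses back to the same value, so the parameters survive a save/load round trip exactly.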

Credits

My neural network was inspired by jackm321’s project.

Backpropagation Neural Network

Inducted May 3, 2022

A Rust implementation of a backpropagation neural network