AI is overrated

Hopefully our future machine overlords don’t see this…

September 17, 2021

You know, I have a lot of problems with AI. It’s nothing personal, it’s just that AI sucks. It sucks in more ways than I can count, and for all the hype that it gets, I can give you several reasons to avoid it like the plague. Call me a Luddite, but you won’t catch me using TensorFlow any time soon.

The ethics of AI have been discussed endlessly already, and I won’t beat a dead horse, but if there is one thing I should make clear, it’s this: decisions have consequences, good ones and bad ones. Whether or not AI should be used to solve a problem depends entirely on whether the associated risks are acceptable, and as soon as real people are affected by an AI’s decisions, things get messy real fast.

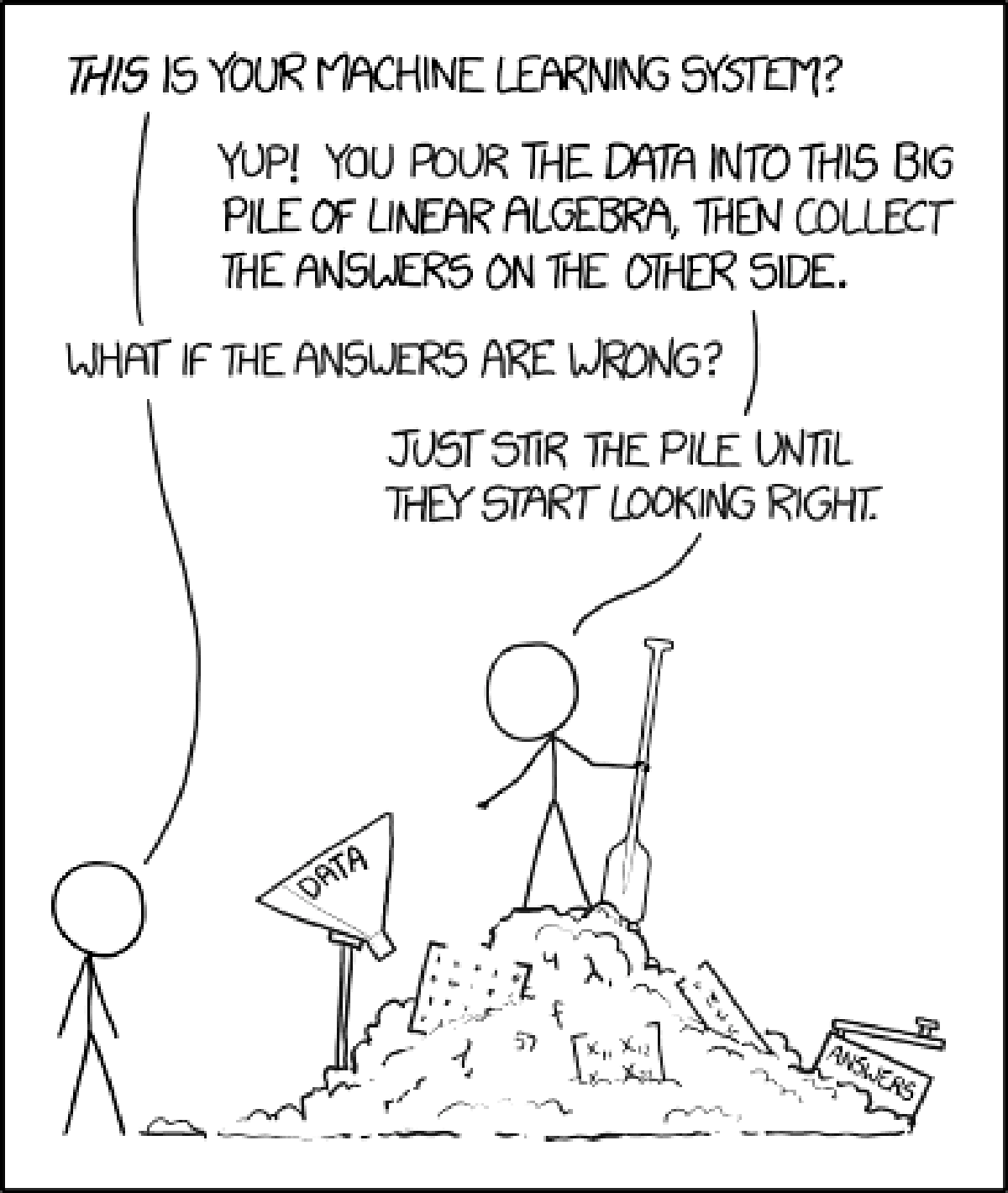

For the uninitiated, here is the oversimplified explanation of how AI and machine learning work. In general, AI is an optimization machine that tweaks the coefficients of a model so that, given a set of inputs, the outputs resemble what is expected or desired. Just as you can draw a line of best fit that minimizes the error over a data set, an AI does the same thing on a massive scale and with more complex methods.
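To make the line-of-best-fit comparison concrete, here is a minimal sketch in plain Python. It repeatedly nudges two coefficients, `a` and `b`, so that `a * x + b` gets closer to the expected outputs. The function name, the learning rate, and the data points are all made up for illustration, and real frameworks do this with far more coefficients and far fancier update rules.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y = a*x + b by gradient descent on the mean squared error."""
    a, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of the mean squared error with respect to a and b.
        grad_a = sum(2 * (a * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (a * x + b - y) for x, y in zip(xs, ys)) / n
        # Tweak each coefficient a little in the direction that lowers the error.
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b


# Made-up data that roughly follows y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.1, 8.8]

a, b = fit_line(xs, ys)
print(f"a = {a:.3f}, b = {b:.3f}")
```

With this toy data the coefficients settle close to a ≈ 1.96 and b ≈ 1.10, which is the ordinary least-squares line. Nothing in the loop knows what the data means. It only knows how to make the error smaller.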

What people don’t realize about AI is that it’s not a magic bullet for every problem. What frustrates me to no end is when people are brainstorming solutions to a problem and the first suggested method, without fail, is to “just train an AI”. If only it were that simple.

1. Lack of transparency

The biggest drawback of artificial intelligence today is the lack of transparency. You plug in an input and the magic black box produces an output. You can measure how often that output is right on data you have already labeled, but why the model produced it, and whether it will hold up on a new input, is much harder to know. A lot of research is going into understanding how AI models make decisions, but so far progress has been limited. These models learn from experience in much the same way we do, by identifying and classifying patterns through repeated exposure. As people, we build an intuition for how something is done and often can’t explain how we do it. AI is similar: the model’s thought process can’t be reconstructed into specific steps that we can understand and follow. All the model has are the connections and weights behind each decision it makes. If we can’t even explain our own reasoning to each other, what hope does a machine have?

2. Big Data isn’t Good Data

A significant part of developing any kind of model is collecting data to build it. There is all this talk about how Big Data is going to change everything. The more data, the better, right?

Wrong. I can’t remember which podcast I heard this on, but a data scientist made a very good point: it’s not the quantity of data that matters, but the quality. If I’m developing a self-driving car and all the video footage was recorded on cameras from 2005 and I mislabel every traffic light, that’s a recipe for disaster. If you feed a machine learning algorithm garbage data, you are going to get garbage answers out.
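Here is a toy version of the mislabeled traffic light problem, as a rough sketch rather than anything resembling a real perception stack. It assumes a single made-up feature (a "hue" number between 0 and 1) and a bare-bones nearest-centroid classifier. The function names and every data point are hypothetical.

```python
def train_centroids(samples):
    """Return the average feature value for each label."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(centroids, value):
    """Pick the label whose average is closest to the given value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def accuracy(centroids, samples):
    hits = sum(predict(centroids, value) == label for value, label in samples)
    return hits / len(samples)


# Made-up training data: low hue means red, high hue means green.
clean = [(0.05, "red"), (0.10, "red"), (0.15, "red"),
         (0.60, "green"), (0.65, "green"), (0.70, "green")]

# The same footage, but every light was labeled as the wrong color.
swap = {"red": "green", "green": "red"}
mislabeled = [(value, swap[label]) for value, label in clean]

# Correctly labeled examples the model has not seen.
test_set = [(0.08, "red"), (0.12, "red"), (0.62, "green"), (0.68, "green")]

clean_accuracy = accuracy(train_centroids(clean), test_set)
garbage_accuracy = accuracy(train_centroids(mislabeled), test_set)
print(clean_accuracy, garbage_accuracy)
```

Same algorithm, same amount of data, and the model trained on the bad labels gets every test light wrong. Collecting ten times more mislabeled footage would not fix that.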

3. Extrapolating from previous data

Any person who has taken a statistics course could tell you that when you extrapolate beyond a given data set, there are no guarantees that the result is accurate. AI only complicates things further, because a model can take hundreds or thousands of parameters into account, so the boundary between interpolating and extrapolating is extremely fuzzy. If you train a model on pictures of dogs, what is it going to do when it sees a cow for the first time?
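The one-dimensional version of this problem is easy to show. In the sketch below, a straight line is fit to a handful of points that secretly follow y = x², all taken from between 0 and 1. The helper name and the numbers are invented for the example, and the fit uses the ordinary closed-form least-squares formulas.

```python
def least_squares_line(xs, ys):
    """Closed-form least-squares fit of y = a*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


# Training data: the true relationship is y = x**2, sampled only on [0, 1].
train_xs = [0.0, 0.25, 0.5, 0.75, 1.0]
train_ys = [x ** 2 for x in train_xs]

a, b = least_squares_line(train_xs, train_ys)

# Worst error inside the range the model was trained on.
inside_error = max(abs((a * x + b) - x ** 2) for x in train_xs)

# Error at a point far outside that range.
far_x = 5.0
outside_error = abs((a * far_x + b) - far_x ** 2)

print(f"inside: {inside_error:.3f}, outside: {outside_error:.3f}")
```

Inside the training range the line is never off by more than 0.125. At x = 5 it is off by more than 20. With one input you can at least see where your data ends. With thousands of inputs, good luck knowing when you have wandered outside it.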

4. Losing the underlying meaning of complicated systems

Sometimes I feel like AI is seen as a shortcut for dealing with difficult problems. Stuck on how to solve this problem? Just AI it away! This creates a layer of abstraction between the developer and the problem where you lose sight of how the system behaves. To explain what I mean, imagine feeding an AI sets of physics data about the universe and then retrieving outputs for some starting conditions. Does the AI really understand how the universe works? Does the programmer gain any understanding of the laws of physics, or does the AI just mimic the patterns that exist?

To “AI a problem away” means you have no clue how anything works. And in some cases, we really are left with no better options. AI is an approximation of reality, but one that does not tell you how it approximates it. That physics example from earlier was actually done by physicists very recently, and surprise, surprise, they have no idea why their physics simulation works so well.

Conclusion

None of this means that we shouldn’t use AI. As George Box eloquently put it, all models are wrong, but some are useful. It’s important to use the tools we have available to solve difficult problems, but using AI is like playing with fire. It has the potential to be extremely useful, but only when used in the right situation, which is when you have no better options left.

We are human, and we make mistakes, and those mistakes can cost us. It seems that the further we go in our quest for perfect reasoning, the more we realize that our imperfect humanity is being instilled in the tangled, infant brains of AI.

The question that ultimately needs to be asked is, does the (supposed) answer justify the means?

References

1. Bathaee, Yavar. “The artificial intelligence black box and the failure of intent and causation.” Harv. JL & Tech. 31 (2017): 889.

2. https://www.nytimes.com/2020/01/12/technology/facial-recognition-police.html

3. https://www.csis.org/blogs/technology-policy-blog/problem-bias-facial-recognition