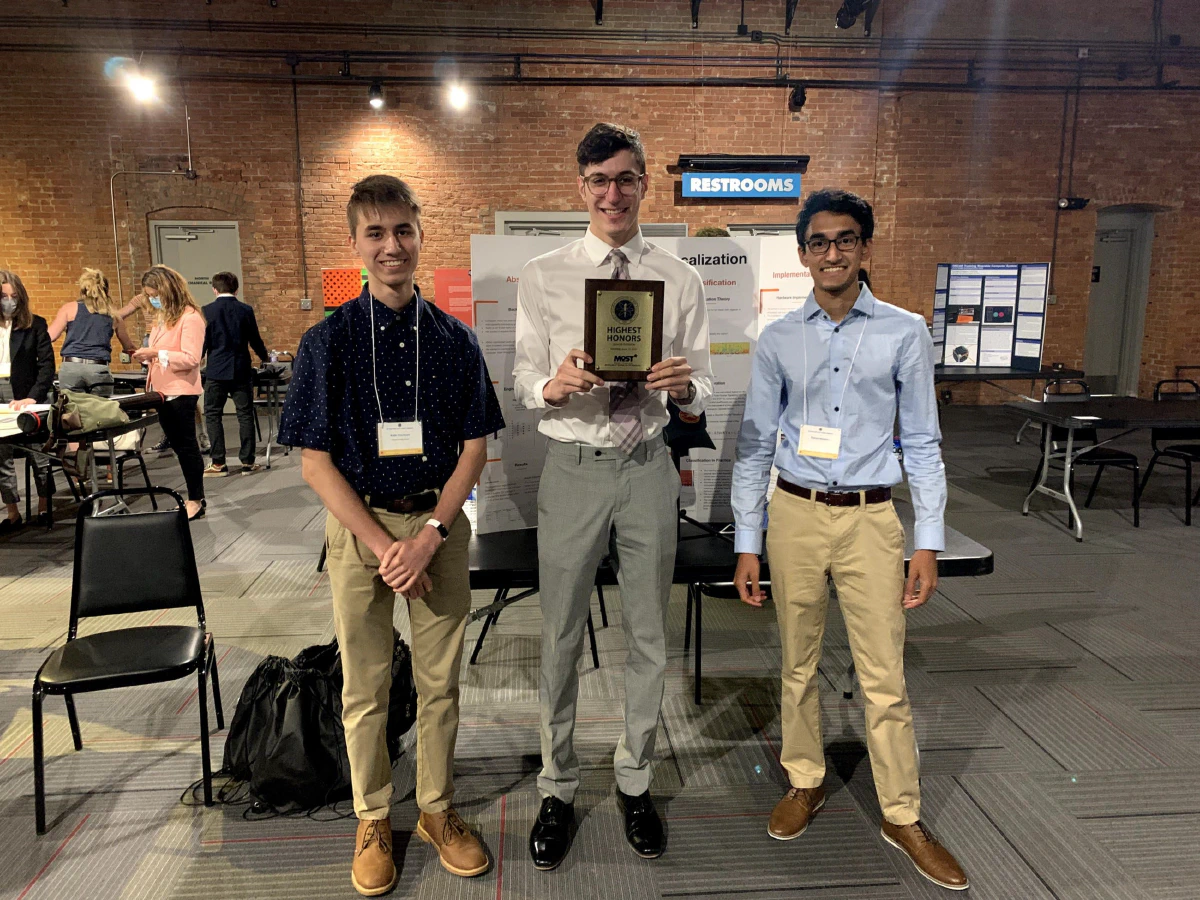

Resonant started as a high school science fair project with two of my friends, Rohan Menon and Jacob Yanoff. We hoped to develop a discreet wearable device that could serve as an alternative to hearing aids for the deaf. The technology also has interesting applications in spatial perception for robotics. Our project won Highest Honors at the New York State STANYS Science Fair.

Specifications

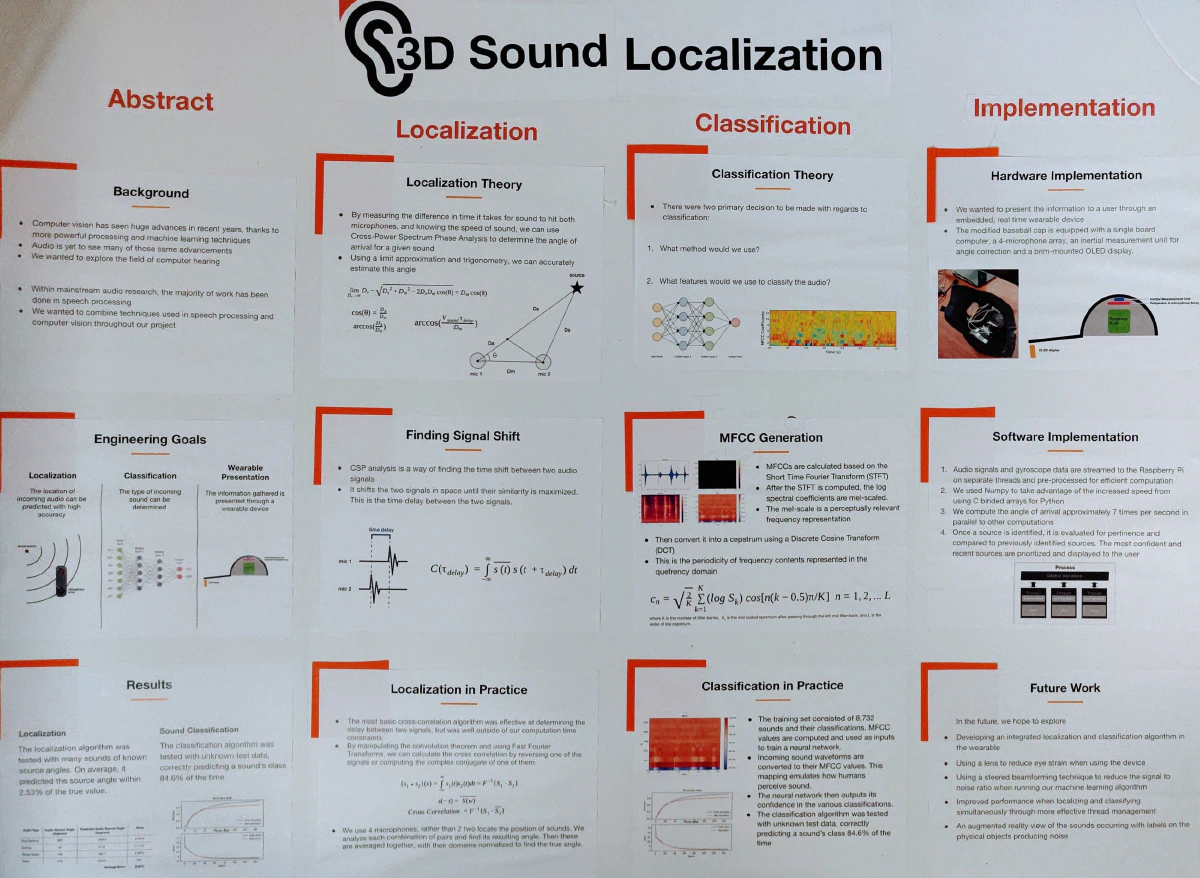

The wearable has fully integrated computing and sensing. Inside the baseball cap are an array of four microphones, an inertial measurement unit (IMU), a Raspberry Pi compute unit, an OLED display at the brim, and a battery. We built the prototype's software in Python with NumPy. We would have preferred a systems programming language, but given our tight deadline we settled on Python. Communication between components was handled over I2C lines, with multithreading on the software side.
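The exact thread layout of the prototype isn't described here, but a common way to use multithreading in this kind of device is a producer/consumer pipeline: one thread captures audio frames while another processes them. The sketch below assumes exactly that, with one capture thread and one processing thread joined by a bounded `queue.Queue`. `read_frame` and `handle_frame` are hypothetical stand-ins for the microphone driver and the localization step, `FRAME_SIZE` is an assumed value, and I2C access is left out entirely.

```python
import queue
import threading

NUM_MICS = 4       # the cap holds an array of four microphones
FRAME_SIZE = 1024  # assumption: samples per microphone per frame


def capture(read_frame, frames, n_frames):
    """Producer: push n_frames audio frames into the queue, then a sentinel."""
    for _ in range(n_frames):
        frames.put(read_frame())  # blocks if the consumer has fallen behind
    frames.put(None)  # sentinel: tells the consumer no more frames are coming


def process(frames, handle_frame):
    """Consumer: hand each frame to the processing step until the sentinel arrives."""
    while (frame := frames.get()) is not None:
        handle_frame(frame)


def run_pipeline(read_frame, handle_frame, n_frames):
    """Run one capture thread and one processing thread until n_frames are handled."""
    frames = queue.Queue(maxsize=8)  # bounded, so a slow consumer cannot exhaust memory
    workers = [
        threading.Thread(target=capture, args=(read_frame, frames, n_frames)),
        threading.Thread(target=process, args=(frames, handle_frame)),
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
```

For example, `run_pipeline(lambda: [[0.0] * FRAME_SIZE for _ in range(NUM_MICS)], print, 3)` pushes three silent frames through the pipeline. Threads suit this workload because the capture side spends most of its time waiting on I/O, which releases CPython's global interpreter lock.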

Math

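The mathematics behind sound localization is extremely interesting and elegant. It blew my mind that such a simple equation could be so effective at producing a real-world result. We were worried that this project was infeasible, yet even under non-ideal conditions (a stereo microphone on a laptop) we saw accurate results!

$$\theta = \arccos\left(\frac{v_s \tau}{d}\right)$$

Here $\theta$ is the angle of arrival measured from the line through the two microphones, $v_s$ is the speed of sound, $\tau$ is the time delay between the two audio signals, and $d$ is the distance between the pair of microphones. The equation assumes the source is far away compared with $d$, so the incoming wavefront is roughly planar; the extra distance the sound travels to reach the farther microphone is then $d\cos\theta = v_s\tau$.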
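The formula translates almost directly into code. This is a minimal sketch, assuming $v_s = 343$ m/s (dry air at about 20 °C); `angle_of_arrival` is a hypothetical helper name. The clamp is a design choice for noisy delay estimates, which can push $v_s\tau/d$ slightly outside $[-1, 1]$ where $\arccos$ is undefined.

```python
import math

SPEED_OF_SOUND = 343.0  # assumption: m/s in dry air at about 20 °C


def angle_of_arrival(tau, d, v_s=SPEED_OF_SOUND):
    """Return theta = arccos(v_s * tau / d) in degrees.

    tau: time delay between the two microphones' signals, in seconds
    d:   distance between the two microphones, in meters
    """
    ratio = v_s * tau / d
    # Measurement noise can give |ratio| > 1; clamp so acos stays defined.
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.acos(ratio))
```

As a worked example, with microphones $d = 0.10$ m apart and a measured delay of $\tau \approx 0.146$ ms, $v_s\tau \approx 0.05$ m, so $\theta = \arccos(0.5) = 60°$. A delay of zero gives $90°$, meaning the source is broadside to the pair.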

Granted, this equation isn’t the full story. It only handles localization in 2D, and estimating the time delay itself takes a lot of additional signal processing, such as computing the cross-correlation of the two signals with the fast Fourier transform.
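Here is one way to estimate $\tau$ with FFT-based cross-correlation, written as an illustrative NumPy sketch rather than the prototype's actual code. The function name `estimate_delay`, the optional `max_lag` parameter, and the sign convention (a positive result means the sound reached `x`'s microphone first) are assumptions. Both signals are zero-padded to at least `len(x) + len(y) - 1` samples so the FFT's circular correlation matches ordinary linear cross-correlation.

```python
import numpy as np


def estimate_delay(x, y, fs, max_lag=None):
    """Estimate how long y lags x, in seconds, via FFT cross-correlation.

    fs:      sample rate in Hz
    max_lag: optional limit (in samples) on the lags searched
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x) + len(y) - 1
    nfft = 1 << (n - 1).bit_length()  # next power of two >= n, so no wrap-around
    # Cross-correlation theorem: irfft(Y * conj(X))[k] = sum_n y[n + k] * x[n]
    cc = np.fft.irfft(np.fft.rfft(y, nfft) * np.conj(np.fft.rfft(x, nfft)), nfft)
    # Negative lags sit at the end of the circular result; move them to the front.
    cc = np.concatenate((cc[nfft - (len(x) - 1):], cc[:len(y)]))
    lags = np.arange(-(len(x) - 1), len(y))
    if max_lag is not None:
        keep = np.abs(lags) <= max_lag
        cc, lags = cc[keep], lags[keep]
    # The lag with the strongest correlation is the delay estimate.
    return lags[np.argmax(cc)] / fs
```

For a microphone pair $d$ meters apart, no physical delay can exceed $d / v_s$, so passing `max_lag=int(np.ceil(d / v_s * fs))` keeps echoes or noise from producing an impossible peak.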

What’s next?

Resonant was the first software and hardware project I worked on, and it is still by far my favorite, which is why I’m excited to have the opportunity to research it more extensively in college! There is room for improvement in almost every aspect of our device: machine learning, localization, hardware, and software, and there is still a lot of potential left to explore in this technology. We plan to focus on improving the algorithm, since much of the research in this area went over our heads in high school. We’d also like to explore using sound localization as a sensing platform for robots.

Areas of interest

- Multi-source localization in 3D space

- Source tracking with Kalman filters

- Audio beamforming for selective reception

- Noise-resistant machine learning model for classification and speech transcription

- Custom microphone array

- Rewrite in a systems programming language (such as C++ or Rust)

Resonant

Inducted February 10, 2021

An exploration of audio localization and identification through accessibility and sensing projects